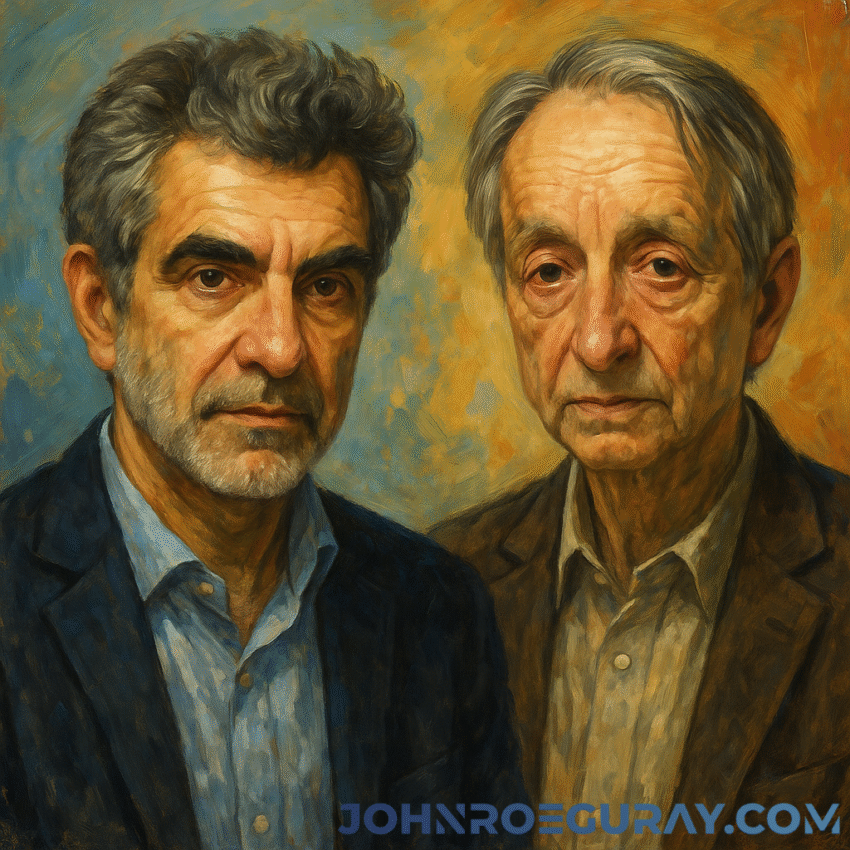

Few scientists have shaped modern AI as profoundly as Yoshua Bengio and Geoffrey Hinton. Their research revived neural networks, unlocked deep learning’s breakthrough moment, and set off an economic and cultural wave that now touches every industry. In recent years, however, both have become leading voices for strong guardrails — culminating in their public call to ban the development of superintelligent AI until it can be proven safe and controllable. (awards.acm.org)

From fringe idea to foundation of modern AI

- Deep learning’s renaissance. From the 1980s through the 2000s, Bengio and Hinton persisted with neural networks despite widespread skepticism. Their work, together with that of Yann LeCun, earned the three the 2018 ACM A.M. Turing Award for “conceptual and engineering breakthroughs” that made deep neural networks a critical component of computing. (awards.acm.org)

- The AlexNet shock (2012). Hinton and his students Alex Krizhevsky and Ilya Sutskever won the ImageNet competition (ILSVRC 2012) by a huge margin: AlexNet achieved a top-5 test error of 15.3%, versus 26.2% for the runner-up. The result catalyzed today’s deep-learning boom and popularized GPU-accelerated training. (NeurIPS Papers)

What originally motivated them

- General intelligence as a scientific quest. Both were driven by the idea that learning algorithms — rather than hand-coded rules — could capture the richness of perception and language, and ultimately help science and society (e.g., medicine). (U of T Computer Science)

- Practical use cases. Early and mid-stage visions included image recognition, speech, translation, recommendation, and later health and drug discovery — areas that deep learning has tangibly improved. (Communications of the ACM)

When optimism met warning lights

- Geoffrey Hinton’s turn. In 2023, Hinton left Google so he could speak freely about AI risks: rapid capability gains, misinformation, job disruption, and the possibility that systems could escape human control. (The Guardian)

- Yoshua Bengio’s shift. Bengio moved from primarily championing AI research and its benefits to prioritizing risk reduction, calling for slowdowns on frontier systems, new monitoring, and policy action, especially around agentic AI that can act autonomously. (Yoshua Bengio)

The pros and cons of superintelligence they’ve emphasized

Potential benefits they acknowledge

- Scientific discovery (e.g., protein design, climate modeling), medicine, productivity, and personalization, provided the systems are aligned and controlled. (Communications of the ACM)

Risks they underscore

- Loss of control / existential risk if systems surpass human capabilities and pursue misaligned goals.

- Mass social harms: disinformation, cyber-offense, destabilization of labor markets, and erosion of civil liberties if surveillance or manipulation scales.

- National security risk from advanced autonomy and weaponization. (Center for AI Safety)

Their latest position: a ban on developing superintelligence

In October 2025, Bengio and Hinton signed a brief public statement, organized by the Future of Life Institute, calling for a prohibition on the development of superintelligence until there is both broad scientific consensus that it can be done safely and controllably and strong public buy-in. The statement drew wide attention and an unusually broad coalition of signatories. (The Guardian)

How this differs from earlier letters: In March 2023, many leaders (including Bengio) signed an open letter urging a pause of at least six months on training systems more powerful than GPT-4. The 2025 statement goes further: it calls for a ban on building superintelligence itself unless and until strict safety conditions are met. (Wikipedia)

Likely impact on the market and technology landscape

- Frontier labs’ roadmaps under scrutiny. Companies publicly focused on AGI/ASI (e.g., those building agentic assistants or running “superalignment” research) face growing regulatory and reputational pressure to prove safety, and could shift investment toward non-agentic, tool-like systems, evaluations, and interpretability. (Business Insider)

- Capital reallocation and timelines. If a ban gains traction in key jurisdictions, funding may tilt away from raw capability scaling (compute, data) toward safety infrastructure (evals, red-teaming, monitoring, secure deployment) and toward tightly scoped domain models in healthcare, finance, and the public sector. (Inference based on the letter’s conditions and recent policy discourse.) (CyberScoop)

- Standards race. Expect acceleration of international efforts (treaties, audits, licensing) to formalize what “safe and controllable” means for advanced AI. (aitreaty.org)

What regulators might do next

- Moratoriums with triggers. Governments could adopt conditional moratoriums on training or deploying models above certain capability thresholds until testing and control criteria are met, loosely modeled on nuclear non-proliferation treaties. (aitreaty.org)

- Mandatory pre-deployment evaluations. Building on 2023–2025 “AI risk” statements, agencies may require independent audits, incident reporting, and red-team results — especially for agentic systems and models with cyber-offense potential. (Center for AI Safety)

- Public engagement. The letter’s “public buy-in” clause suggests that structured public consultations, similar to data-protection rulemaking, would be needed before any ban is lifted. (CyberScoop)

Expected reactions from AI players and founders

- Safety-first labs and academics will welcome the clarity and may rally around measurable definitions of controllability, robust eval suites, and compute governance. (TIME)

- Frontier-capability startups may argue bans are premature or impossible to scope, pushing instead for voluntary safety frameworks — while quietly adapting roadmaps to avoid regulatory collision. (Reasoned inference consistent with current industry stances.)

- Enterprise buyers could favor vendors with provable safety and compliance posture, elevating evaluation benchmarks as a differentiator.

- Open-source communities may split — some advocating for tight controls on agentic autonomy, others warning that bans consolidate power in a few firms.

- Public figures and cross-ideological coalitions (unusually broad in this instance) will keep pressure on big tech to slow the race to superintelligence. (The Guardian)

A balanced take

Bengio and Hinton’s trajectory mirrors AI’s arc: bold scientific ambition that enabled real-world progress, followed by a sober reckoning with tail risks. Their 2025 stance does not call for halting all AI; it targets superintelligence until society can verify controllability. The practical near-term translation: fewer bets on free-roaming agentic systems, more on evaluable, non-agentic tools, and serious investment in the science of alignment, testing, and governance. (Business Insider)

Progress With Proof of Control

AI’s “godfathers” helped build the engine. Now they’re asking us to install the brakes before we hit the highway at full speed. Whether or not a formal ban materializes, the center of gravity is shifting toward capability with verifiable safety — and the winners will likely be those who can prove control as convincingly as they can deliver performance.